No More Paid Endpoints: How to Create Your Own Free Text Generation Endpoints with Ease

One of the biggest challenges of using LLMs is the cost of accessing them. Many LLMs, such as OpenAI's GPT-3, are only available through paid APIs. In this article, we see how to deploy any open-source LLM as a free API endpoint using HuggingFace and Gradio.

Large language models (LLMs) are gaining popularity because of their capacity to produce text, translate between languages and produce various forms of creative content. However, one of the biggest challenges of using LLMs is the cost of accessing them. Many LLMs, such as OpenAI's GPT-3, are only available through paid APIs.

Luckily, there is a smart way to use any LLM for free. By deploying your own LLM on an API endpoint, you can access it from anywhere in the world without having to pay any fees. In this article, we will show you how to deploy any open-source LLM as a free API endpoint using HuggingFace and Gradio.

Benefits of Creating Your Own Text Generation Endpoints

- It can save you money. Paid APIs can be expensive, especially if you are using a large number of requests. By deploying your own LLM, you can avoid these costs.

- Control over your data. When you use a paid API, you are giving the API provider access to your data. By deploying your own endpoint, you can keep your data safe and secure.

- Access to the latest models. By deploying your own endpoint, you can choose the LLM you wish to use.

- Ability to use the LLM capabilities on any device. LLMs require significant resources to run. The API endpoint enables any device connected to the internet to harness the capabilities of the LLM.

Why use Gradio and HuggingFace Spaces?

While there are popular cloud hosting providers like AWS and GCP, their setup process can be complex, and you often need to build your own Flask API. Furthermore, these providers lack free tiers that can handle large language models (LLMs).

Gradio is a tool that makes it easy to create interactive web apps that can be used to interact with LLMs. Huggingface Spaces is a free hosting service that allows you to deploy your machine learning apps to the web.

With the help of a Gradio app's API functionality, we can easily access the Language Model (LLM). We deploy the Gradio app using the free tier of HuggingFace Spaces.

Before we can get started on how to deploy the LLMs, let's create a new space on HuggingFace.

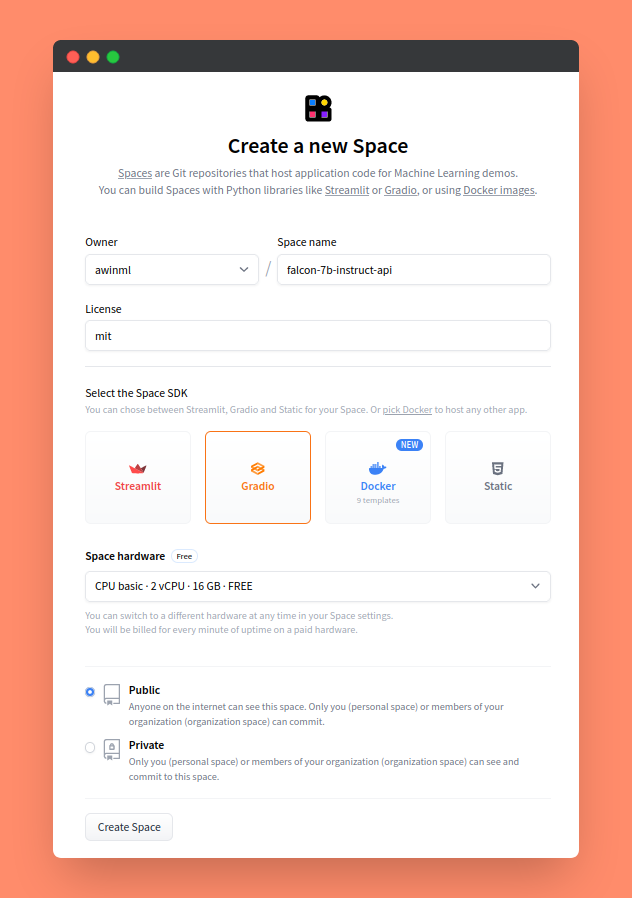

Creating a new Space on HuggingFace

A "Space" on HuggingFace is a hosting environment that can be used to host your ML app. Spaces are priced based on CPU type, and the simplest one is free!

Create a new Space by:

- Go to https://huggingface.co/spaces and click Create new Space.

(You will need to sign-up for a HuggingFace Account to create the space.) - Select the MIT license if you’re unsure.

- Select Gradio as Space SDK.

- Select Public since you want the API endpoint to be available at all times.

Creating the Gradio app to access the LLM

In this article, we create two Gradio apps to access two types of LLM formats:

- A LLM checkpoint available on HuggingFace (the usual PyTorch model)

- A CPU-optimized version of the LLM (GGML format based on LLaMA.cpp)

The basic format of the app is the same for both formats:

- Load the model.

- Create a function that accepts an input prompt and uses the model to return the generated text.

- Make a Gradio interface to display the generated text and accept user input.

LLM from a HuggingFace Checkpoint:

In this example we deploy the newly launched Falcon model using its HuggingFace checkpoint.

To create the Gradio app, make a new file called app.py, and add the following code.

app.py

import gradio as gr

from transformers import AutoTokenizer, AutoModelForCausalLM

import torch

model = AutoModelForCausalLM.from_pretrained(

"tiiuae/falcon-7b-instruct",

torch_dtype=torch.bfloat16,

trust_remote_code=True,

device_map="auto",

low_cpu_mem_usage=True,

)

tokenizer = AutoTokenizer.from_pretrained("tiiuae/falcon-7b-instruct")

def generate_text(input_text):

input_ids = tokenizer.encode(input_text, return_tensors="pt")

attention_mask = torch.ones(input_ids.shape)

output = model.generate(

input_ids,

attention_mask=attention_mask,

max_length=200,

do_sample=True,

top_k=10,

num_return_sequences=1,

eos_token_id=tokenizer.eos_token_id,

)

output_text = tokenizer.decode(output[0], skip_special_tokens=True)

print(output_text)

# Remove Prompt Echo from Generated Text

cleaned_output_text = output_text.replace(input_text, "")

return cleaned_output_text

text_generation_interface = gr.Interface(

fn=generate_text,

inputs=[

gr.inputs.Textbox(label="Input Text"),

],

outputs=gr.inputs.Textbox(label="Generated Text"),

title="Falcon-7B Instruct",

).launch()

This Python script uses a HuggingFace Transformers library to load the tiiuae/falcon-7b-instruct model. The max generation length is set to 200 tokens and the top_k sampling of tokens is set to 10. These text generation parameters can be set as per your requirement. The prompt is removed from the generated text so that the model only returns the generated text and not the prompt plus the generated text.

A requirements.txt file is created to specify the dependencies for the app. The following libraries are included in the file:

requirements.txt

datasets

transformers

accelerate

einops

safetensors

The complete example can be viewed at: https://huggingface.co/spaces/awinml/falcon-7b-instruct-api.

The code for the app can be downloaded from: https://huggingface.co/spaces/awinml/falcon-7b-instruct-api/tree/main.

LLM from a CPU-Optimized (GGML) format:

LLaMA.cpp is a C++ library that provides a high-performance inference engine for large language models (LLMs). It is based on the GGML (Graph Neural Network Machine Learning) library, which provides a fast and efficient way to represent and process graphs. LLAMA.cpp uses GGML to efficiently load and run LLMs, making it possible to run quick inference on large models.

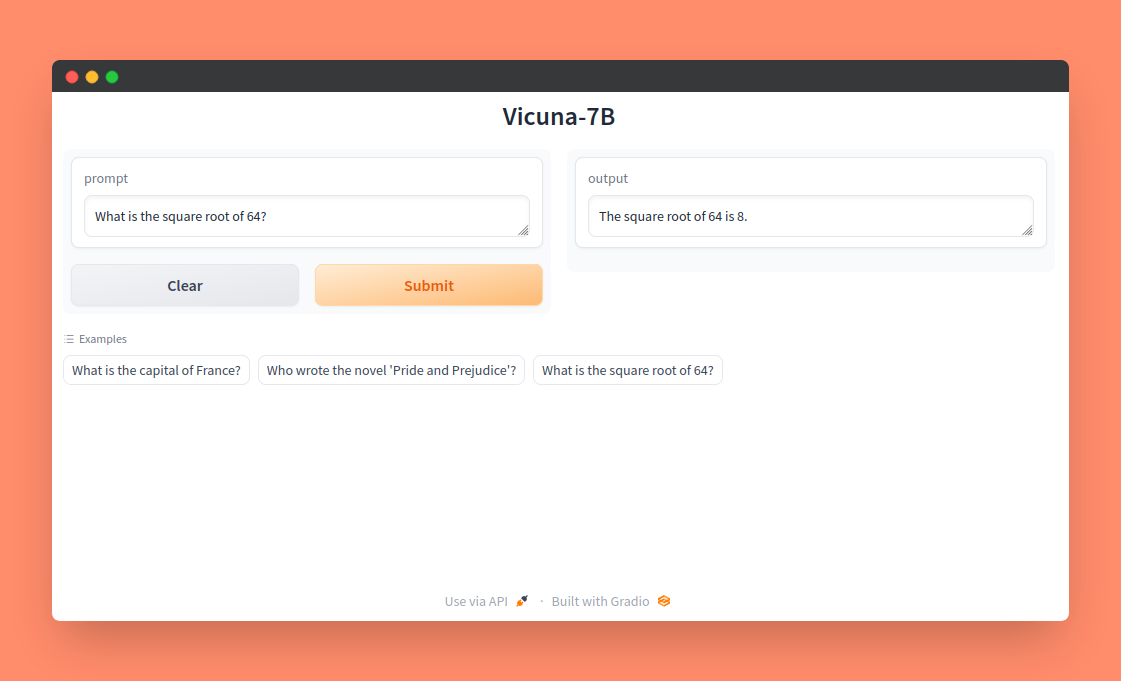

In this example we load the Vicuna model in GGML format and deploy it for inference. The inference time is significantly lower as compared to the model checkpoint available on HuggingFace.

To create the Gradio app, make a new file called app.py, and add the following code.

app.py

import os

import urllib.request

import gradio as gr

from llama_cpp import Llama

def download_file(file_link, filename):

# Checks if the file already exists before downloading

if not os.path.isfile(filename):

urllib.request.urlretrieve(file_link, filename)

print("File downloaded successfully.")

else:

print("File already exists.")

# Dowloading GGML model from HuggingFace

ggml_model_path = "https://huggingface.co/CRD716/ggml-vicuna-1.1-quantized/resolve/main/ggml-vicuna-7b-1.1-q4_1.bin"

filename = "ggml-vicuna-7b-1.1-q4_1.bin"

download_file(ggml_model_path, filename)

llm = Llama(model_path=filename, n_ctx=512, n_batch=126)

def generate_text(prompt="Who is the CEO of Apple?"):

output = llm(

prompt,

max_tokens=256,

temperature=0.1,

top_p=0.5,

echo=False,

stop=["#"],

)

output_text = output["choices"][0]["text"].strip()

# Remove Prompt Echo from Generated Text

cleaned_output_text = output_text.replace(prompt, "")

return cleaned_output_text

description = "Vicuna-7B"

examples = [

["What is the capital of France?", "The capital of France is Paris."],

[

"Who wrote the novel 'Pride and Prejudice'?",

"The novel 'Pride and Prejudice' was written by Jane Austen.",

],

["What is the square root of 64?", "The square root of 64 is 8."],

]

gradio_interface = gr.Interface(

fn=generate_text,

inputs="text",

outputs="text",

examples=examples,

title="Vicuna-7B",

)

gradio_interface.launch()

The app first downloads the required GGML file, in this case the Vicuna-7b-Q4.1 GGML. The code checks if the file is already present before attempting to download it.

We leverage the python bindings for LLaMA.cpp to load the model.

The context length of the model is set to 512 tokens. The maximum supported context length for the Vicuna model is 2048 tokens. A model with a smaller context length generates text much faster than a model with a larger context length. In most cases, a smaller context length is sufficient.

The number of tokens in the prompt and generated text can be checked using the free Tokenizer tool by OpenAI

The batch size is set to 128 tokens. This helps speed up text generation over multithreaded CPUs.

The max generation length is set to 256 tokens, temperature to 0.1, and top-p sampling of tokens to 0.5. A list of tokens to stop generation is also added. These text generation parameters can be set as per your requirement.

A detailed guide on how to use GGML versions of popular open-source LLMs for fast inference can be found at How to Run LLMs on Your CPU with Llama.cpp: A Step-by-Step Guide.

A requirements.txt file is created to specify the dependencies for the app. The following libraries are included in the file:

requirements.txt

llama-cpp-python==0.1.62

The complete example can be viewed at: https://huggingface.co/spaces/awinml/vicuna-7b-ggml-api.

The code for the app can be downloaded from: https://huggingface.co/spaces/awinml/vicuna-7b-ggml-api/tree/main.

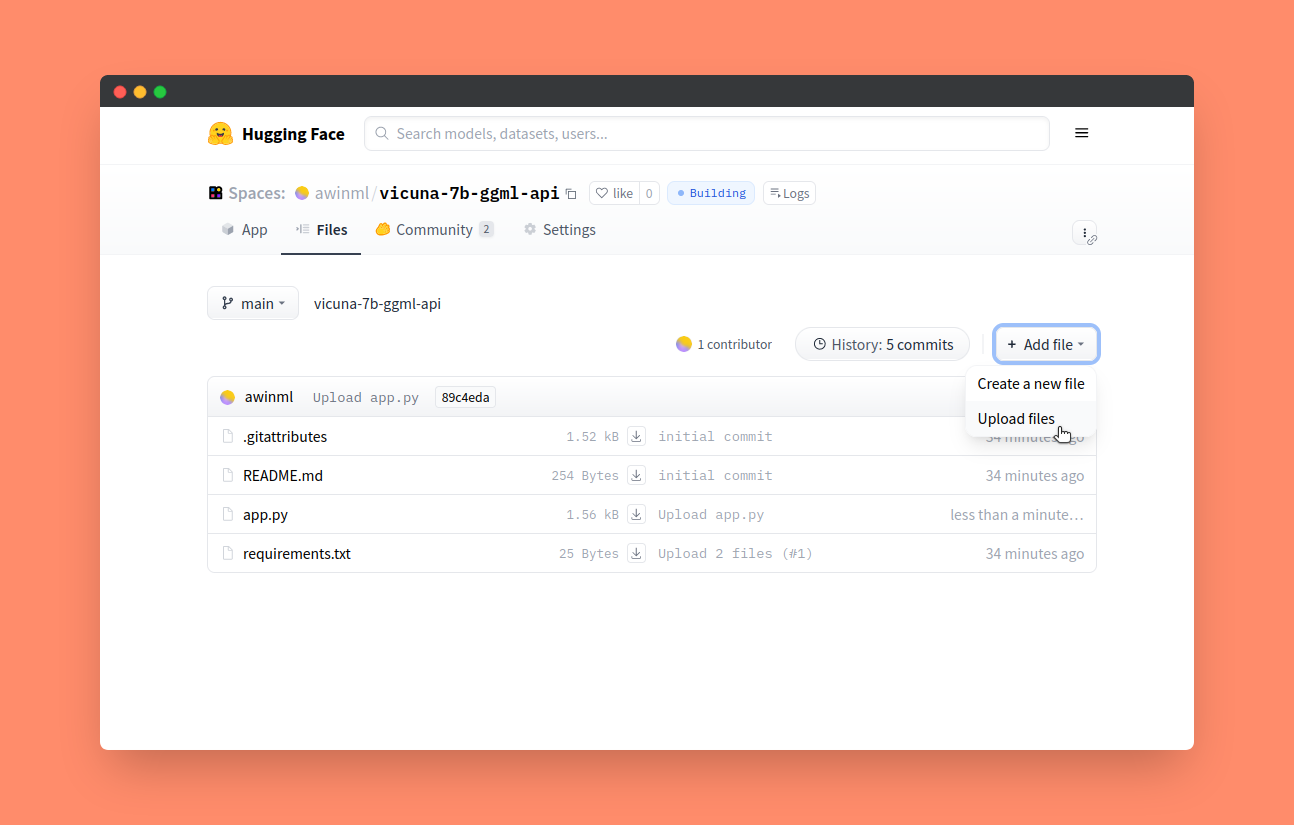

Deploying the Gradio app on HuggingFace Spaces:

Deploying a Gradio app on HuggingFace Spaces is as simple as uploading the following files on your HuggingFace Space:

app.py- This file contains the code of the app.requirements.txt- This file lists the dependencies for the app.

The deployed app will expect you to pass in the input text or prompt, which it’ll then use to generate an appropriate response.

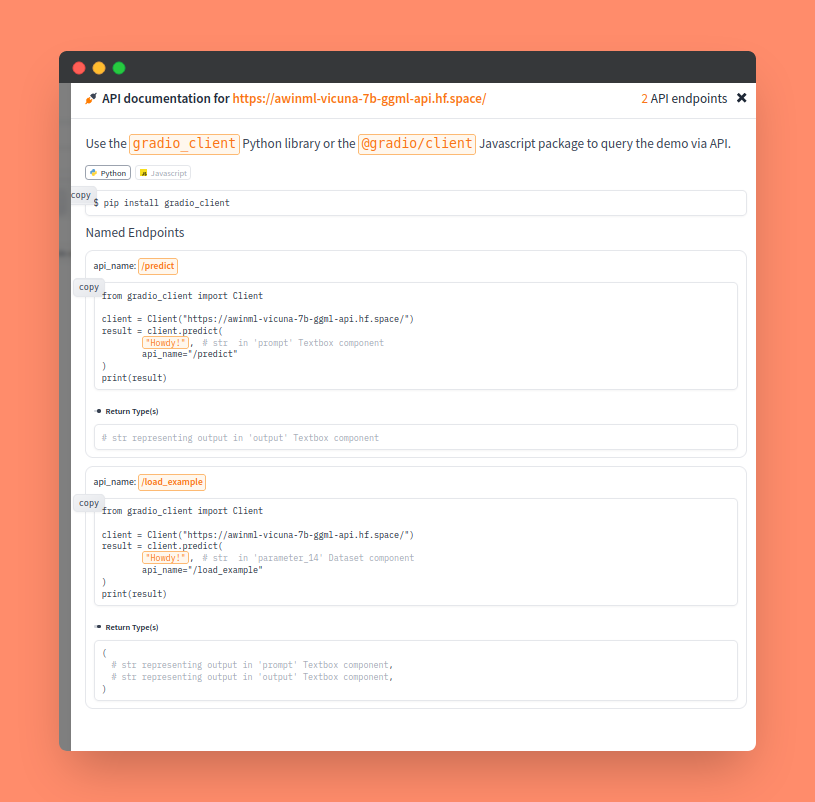

Accessing the LLM as an API Endpoint:

The deployed Gradio app is already running a Prediction (Inference) API endpoint in the background.

The endpoint can be easily accessed through the Gradio Python Client.

At the bottom of the deployed app, you will see a link called "Use via API". Click this link to view the instructions on how to call your app with the API.

To use the API, you will need to install the Gradio Client Python library. You can do this by running the following command in your terminal:

pip install gradio_client

Once you have installed the library, you can use any of the deployed apps for generating text similar to the OpenAI completion endpoints in the following manner:

from gradio_client import Client

# Pass the link to your HuggingFace Space here

client = Client("https://awinml-falcon-7b-instruct-api.hf.space/")

# Pass the Input Prompt to the model

result = client.predict(

"What is the capital of USA?",

api_name="/predict"

)

print(result)

This code will first create a Client object and pass the link to your HuggingFace Space to it. Then, it will pass the input prompt to the model and call the predict() method. The predict() method will return the generated text, which you can then print to the console.

Latency Analysis:

We measure the time taken by the endpoints to generate text based on different prompts. The testing was done on two private endpoints created based on the instructions in the post.

The Falcon-7B model has been loaded using torch.bfloat16 weights using HuggingFace Transformers. The Vicuna-7B model has been loaded using the Q4.1 GGML file using Llama.cpp. The model has been loaded with a context length of 512 tokens instead of the full 2048 tokens.

For both the models, the max generation tokens was set to 256 tokens.The temperature, top_p and top_k values were chosen based on the article and were not the same for both the models. This post is not to compare the text generation performance of both the models.

The following prompts were used to test the latencies of the endpoints:

- What is the capital of Greece?

- Can you help me write a formal email to a potential business partner proposing a joint venture?

- What are the two main differences between Python and JavaScript programming languages?

- Extract five important keywords from the following text: How can governments utilize fiscal and monetary policies to combat economic recessions?

Vicuna-7B:

-

Question: What is the capital of Greece?

Generated Text: The capital of Greece is Athens.

Time taken: 6.1s.

-

Question: Can you help me write a formal email to a potential business partner proposing a joint venture?

Generated Text:

I am writing to express my interest in forming a joint venture with your company. I believe that our complementary strengths and shared vision for the future make this an ideal opportunity for both of us. Our company has extensive experience in [insert relevant industry or field], and we have developed a unique product line that is well-positioned to meet the needs of our customers. We are confident that your company's expertise in [insert relevant area] would be a valuable asset to our partnership, and we believe that together we could create something truly special. I am particularly excited about the potential for collaboration on [insert specific project or initiative], which I believe has the potential to revolutionize the industry. Our teams have already begun discussing the details of this project, and we are confident that it will be a huge success. In addition to our shared goals and complementary strengths, I am also impressed by your company's commitment to [insert relevant social or environmental issue]. As someone who shares these values, I believe that our partnership could make a real difference in the world.

Time Taken: 126.2s

-

Question: What are the two main differences between Python and JavaScript programming languages?

Generated Text:

Python is a general-purpose, high-level language that is easy to learn and use. It has a syntax that is similar to English and supports multiple data types such as integers, strings, and lists. JavaScript, on the other hand, is a client-side scripting language used for web development. It is primarily used to add interactive elements to websites.

Python is an interpreted language while JavaScript is compiled. Python code is executed by an interpreter, which converts the code into machine code that can be run directly by the computer's processor. In contrast, JavaScript code is first compiled into bytecode and then run by a JavaScript engine.

Time Taken: 72.4s

-

Question: Make a list of the top three Morgan Freeman movies. Only specify the movie names.

Generated Text:

1. The Shawshank Redemption (1994)

2. Driving Miss Daisy (1989)

3. Glory (1989)

Time Taken: 16.1s

Falcon-7B:

- Question: What is the capital of Greece?

Generated Text: Athens is the capital of Greece.

Time taken: 61.2s.

- Question: Can you help me write a formal email to a potential business partner proposing a joint venture?

Generated Text:

--------- Did not capture generation. Took too long! ----------

Time Taken: 2650s+

- Question: What are the two main differences between Python and JavaScript programming languages?

Generated Text:

1. Syntactical differences: Python is a higher-level language and has a more structured syntax compared to JavaScript, which is a lower-level language with less strict syntax rules.

2. Use of brackets for function calls: While Python uses the 'def' keyword to define functions, JavaScript functions are defined using brackets or named function literals.

Time Taken: 1524.3s

- Question: Make a list of the top three Morgan Freeman movies. Only specify the movie names.

Generated Text:

1. The Shawshank Redemption

2. Forrest Gump

3. Invincible

Time Taken: 371.3s

Verdict:

The Falcon-7B model is extremely slow to generate text, with the lowest latency being 61 seconds and the highest being over 2,650 seconds. This is clearly not very useful.

I suspect that this is because HuggingFace recently added support for Falcon to Transformers and has not yet optimized the model implementation. This could be because the architecture of the model is still largely unknown. The HuggingFace implementation also relies on external code to run the model, which may be a bottleneck. We may have better luck with other, more mature generative models on HuggingFace.

The Vicuna GGML model, on the other hand, seems to perform extremely well, with latencies ranging from a mere 6 seconds to 126 seconds for the longest generation.

Llama.cpp is being constantly improved, and using a smaller, quantized version may be able to reduce this latency even further. The LLM loading parameters also significantly affect the performance, so optimizing those may also lead to some speedup.

The good thing is that HuggingFace provides no restrictions on the number of spaces that a user can create. This means that multiple spaces can be created and easily used to process requests in parallel.

Based on this, it is quite easy to recommend creating endpoints using the Vicuna GGML model and using it for prototyping applications instead of the expensive OpenAI GPT-3 API.

Conclusion

Now, you can deploy any large language model (LLM) as an API endpoint with just a few lines of code, thanks to Gradio and HuggingFace Spaces. These tools make it simple to build your own free text generation endpoints. By deploying your own LLM on an API endpoint, you can save money by avoiding costly paid APIs while still benefiting from the remarkable capabilities of these powerful language models.